Enterprise Generative AI can't scale without strong semantic layers

Generative AI has taken the world by storm. It's everywhere. I'm currently flat hunting, and I can even search listings using natural language.

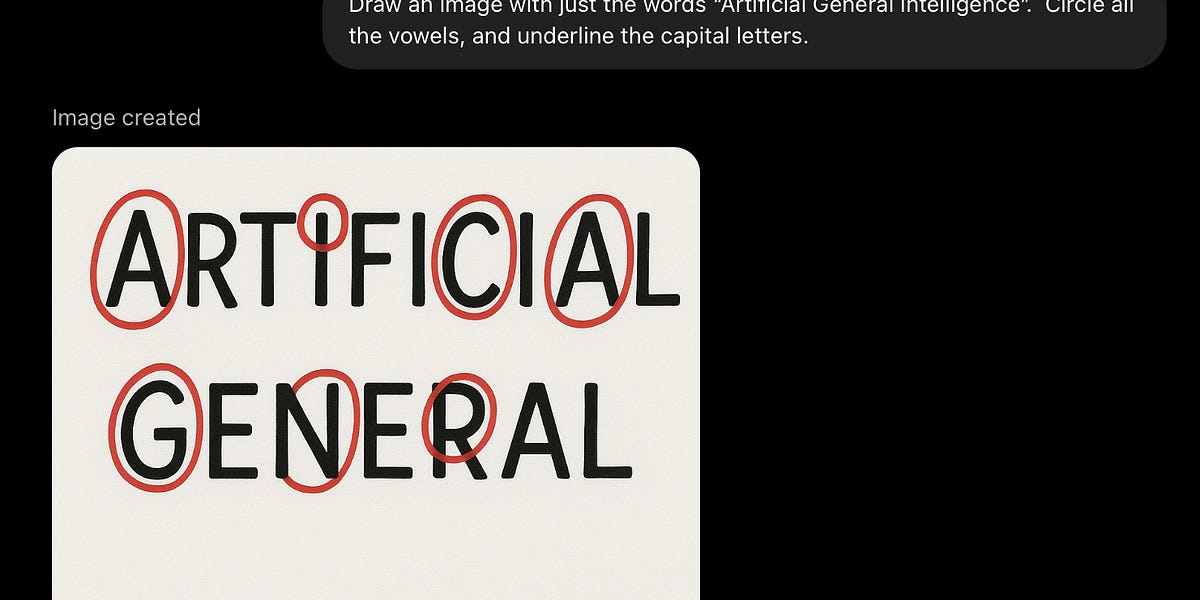

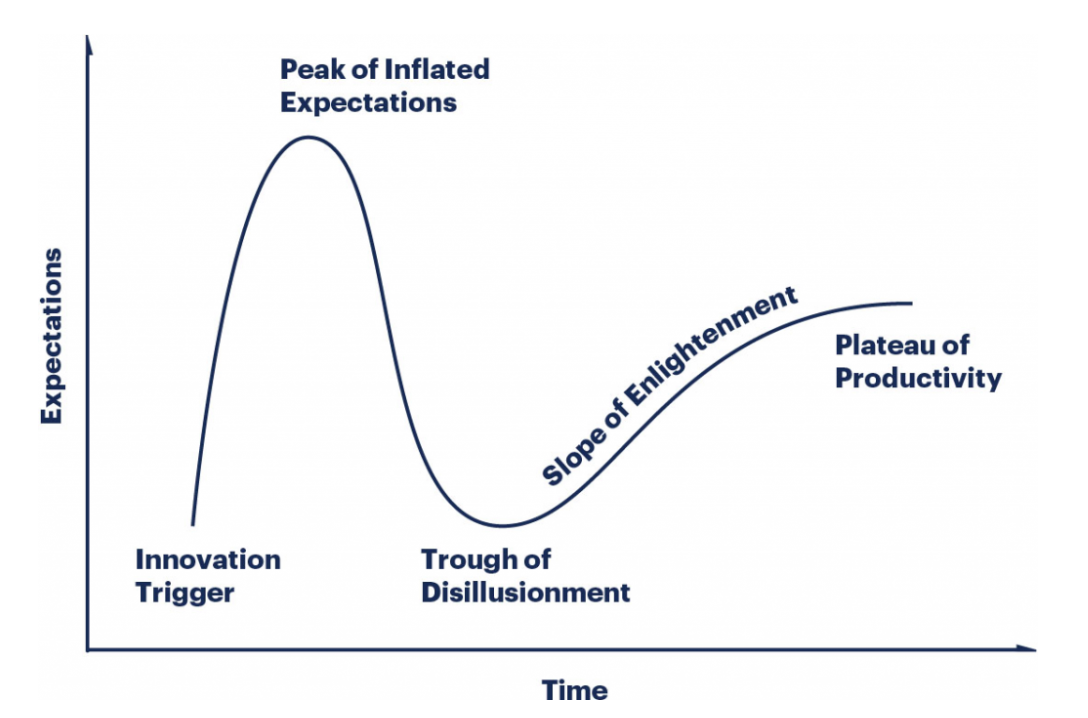

As with most new and promising technologies, generative AI is getting inflated attention (not always unwarranted), which leads to it being applied to anything and everything. We are most likely at this "peak of inflated expectations" (cf. the disappointment expressed after the release of GPT-5).

MIT recently released a report stating that 95% of generative AI pilots fail to deliver revenue acceleration. This isn't new: before LLMs, businesses already struggled to get data science to deliver value. AI relies more heavily on good data to be effective than machine learning and data science do, for a few reasons:

- Data science and ML engineering can be done offline, so even if the approach doesn't scale, a skilled data scientist can still extract insights from noisy datasets.

- AI engineering happens online, so optimising the system for a specific need is done more superficially (i.e. via prompting). The danger is that these models still return answers and appear functional while potentially delivering low-quality results.

The signs are already there: AI search performs much worse than traditional search, and LLMs are prone to hallucinations. There is a tremendous opportunity for increased productivity, but there's one thing these models cannot replace (at least for now): trust. Highly trained professionals, though imperfect, are the ones who command trust and authority. Deploying LLMs in digital products in particular can lead to a breach of trust: Duolingo, for example, faced backlash after announcing its pivot to AI. Are we at the peak of inflated expectations, or already in the trough of disillusionment? I believe we're somewhere in between.

Product leaders integrating LLMs into their products must proceed with caution. Of course, there's a risk of being left behind, so moving fast and shipping AI features matters; but given the phase we're in, there are a few ways to do this thoughtfully.

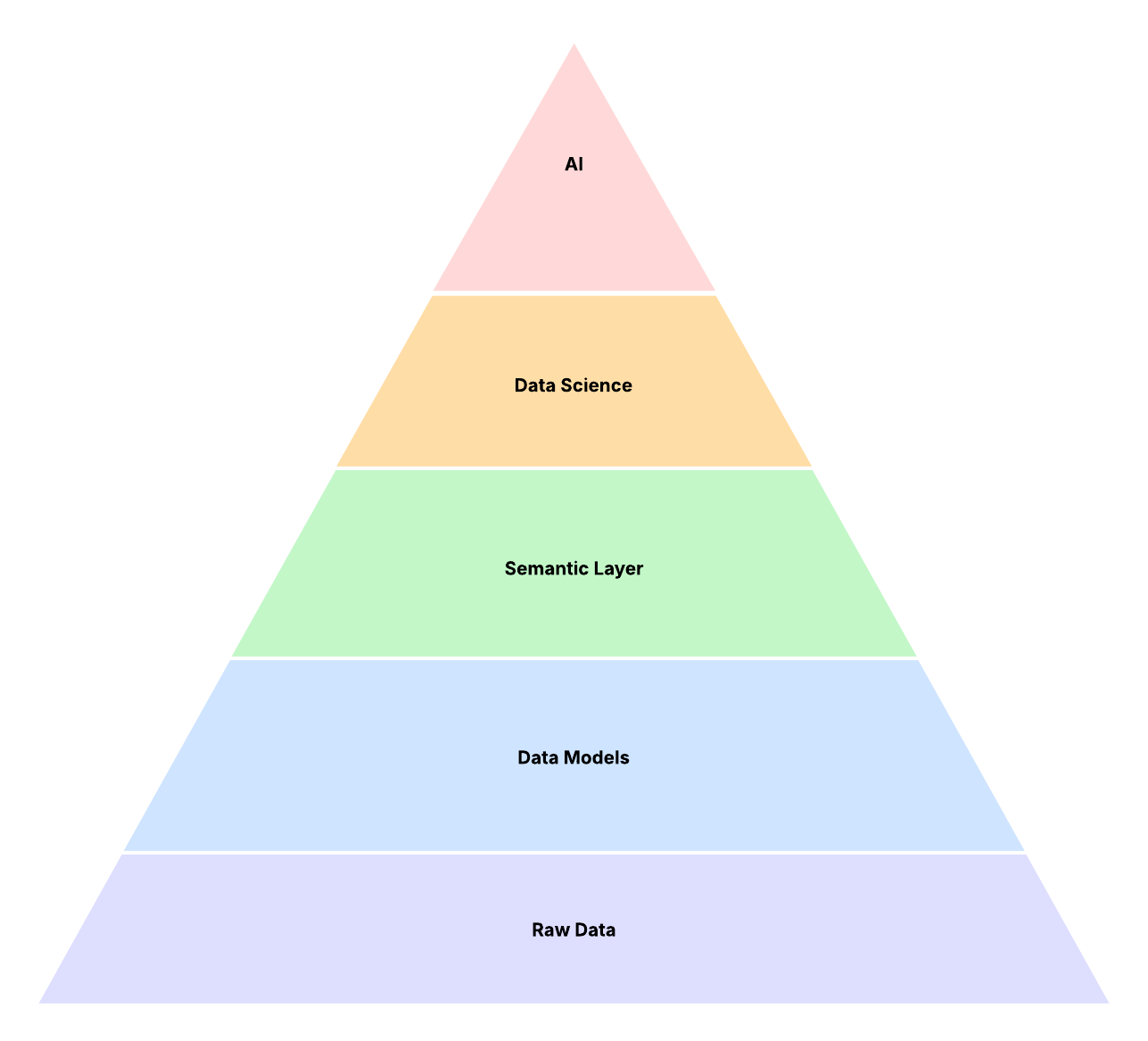

Pyramid of Data Needs

Data science and AI are the most advanced data needs. Building AI and data science functions without solid data foundations will lead to all sorts of pain down the road. Not every company has strong data foundations, but this in no way means they shouldn't embed AI or start their data science journey. It means that the first AI or data science features they build should be pilots that put in place the minimum viable set of components for each of the data needs in the pyramid.

Minimum Viable AI Feature

Lean product principles still apply. What is the minimum viable feature you should implement? A common use case is helping customers use the platform more productively (finding information or running a complex workflow), either through simple prompting or a chat interface. To me, this is the minimum viable AI feature (MVAIF) for most product companies (it is what my favourite rental property search app built).

Retrieval Augmented Generation

LLMs have static knowledge and are trained on generic datasets, so they have no awareness of your business context (some of it can be passed via the system prompt, but only to a limited extent). To provide business data to the model, it needs to be indexed and retrieved into the execution context at query time. Retrieval Augmented Generation (RAG) is, as of now, the gold standard for doing this.
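To make "indexed and retrieved into the execution context" concrete, here is a minimal sketch of the retrieval step of a RAG pipeline. It assumes a toy bag-of-words cosine similarity in place of the embedding model and vector store a production system would use; `ToyIndex`, `build_prompt` and the sample listing documents are hypothetical. The resulting string is what would be sent to the LLM; no model call is shown.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase and split on any non-alphanumeric character."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class ToyIndex:
    """Hypothetical in-memory index: document id -> text and term-count vector."""

    def __init__(self) -> None:
        self.docs: dict[str, str] = {}
        self.vectors: dict[str, Counter] = {}

    def add(self, doc_id: str, text: str) -> None:
        # Indexing step: store the raw text and its vector representation.
        self.docs[doc_id] = text
        self.vectors[doc_id] = Counter(tokenize(text))

    def search(self, query: str, k: int = 2) -> list[str]:
        # Retrieval step: rank every document by similarity to the query.
        q = Counter(tokenize(query))
        ranked = sorted(
            self.vectors, key=lambda d: cosine(q, self.vectors[d]), reverse=True
        )
        return ranked[:k]


def build_prompt(index: ToyIndex, question: str, k: int = 2) -> str:
    """Augmentation step: place the top-k retrieved documents in the model's context."""
    context = "\n".join(f"- {index.docs[d]}" for d in index.search(question, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    index = ToyIndex()
    # Hypothetical business documents the base model has never seen.
    index.add("lease", "Minimum lease term is 12 months for all flats.")
    index.add("pets", "Pets are allowed in listings tagged pet_friendly.")
    index.add("deposit", "The deposit equals two months of rent.")
    print(build_prompt(index, "How long is the minimum lease?", k=1))
```

Swapping the toy similarity for real embeddings changes the quality of retrieval, not the shape of the pipeline: index, retrieve, then augment the prompt.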

A RAG pipeline can be built as a standalone piece of infrastructure; however, building it on top of a semantic layer fast-tracks indexing the relevant data. Just as semantic layers reduce time to insight, they reduce the time and effort needed to index the data the LLM needs, because business entities and metrics are already defined and documented in one place.
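A minimal sketch of why a semantic layer shortens indexing, assuming it exposes governed metric definitions (name, description, SQL). Each definition is rendered into one self-describing document, and a content hash shows which documents need re-indexing when definitions change. `Metric`, `render`, `fingerprint` and `plan_reindex` are hypothetical names, not the API of any particular semantic-layer product, and the actual embedding and vector-store writes are left out.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """Hypothetical shape of a governed metric exposed by a semantic layer."""
    name: str
    description: str
    sql: str


def render(metric: Metric) -> str:
    """Turn one metric definition into a self-describing text document for the index."""
    return f"{metric.name}: {metric.description} Defined as {metric.sql}."


def fingerprint(text: str) -> str:
    """SHA-256 content hash used to detect when a rendered definition has changed."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_reindex(
    metrics: list[Metric], indexed: dict[str, str]
) -> tuple[list[str], list[str]]:
    """Compare the semantic layer against what is already indexed.

    `indexed` maps metric name -> fingerprint of the document currently in the index.
    Returns (names to upsert, names to delete), each sorted alphabetically.
    """
    current = {m.name: fingerprint(render(m)) for m in metrics}
    to_upsert = sorted(n for n, h in current.items() if indexed.get(n) != h)
    to_delete = sorted(n for n in indexed if n not in current)
    return to_upsert, to_delete


if __name__ == "__main__":
    v1 = [
        Metric("avg_rent", "Average monthly rent of active listings.",
               "AVG(rent) WHERE status = 'active'"),
        Metric("occupancy_rate", "Share of units currently let.",
               "SUM(is_let) / COUNT(*)"),
    ]
    # Fingerprints of what is currently in the (hypothetical) index.
    indexed = {m.name: fingerprint(render(m)) for m in v1}

    # The semantic layer changes: one definition is edited, one is retired.
    v2 = [
        Metric("avg_rent", "Average monthly rent of active listings, excluding bills.",
               "AVG(rent_excl_bills) WHERE status = 'active'"),
    ]
    print(plan_reindex(v2, indexed))  # (['avg_rent'], ['occupancy_rate'])
```

The design choice in this sketch is that the semantic layer, rather than raw tables, is the unit of indexing: when a definition is edited, only that one document needs to be re-embedded.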

For business applications, accurate results are fundamental to maintaining user satisfaction and trust. Although LLMs can create delightful user experiences, they cannot do so out of the box: business data needs to be made available within the execution context. At scale, RAG pipelines built on top of semantic layers make it possible to update this data efficiently, keeping the LLM's knowledge current as the data evolves. Companies need strong product leadership just as much as AI and ML leadership to navigate how to leverage these new technologies. Introducing AI and ML thoughtfully, in a way that delivers value, is possible. There are no shortcuts to get there.

Resources