Evaluating engineering productivity in the age of AI

There is a lot of noise about coding with the latest AI advancements. I think much of it is reactionary, though some takes are quite sober. I've always been skeptical of hype because I'm a firm believer in the precautionary principle for decisions with long-term impact, since their wide-ranging ramifications can only be understood after years.

A complex topic

Developer productivity is a complex topic, and sometimes a bit of a taboo in engineering, because productivity metrics can have adverse effects if they are not used properly:

- Metrics can be gamed

- Metrics don't tell the whole story (not all code contributions are equal)

One of the reasons for this is that developer productivity is multi-dimensional, so it requires monitoring multiple metrics. I believe that tracking the productivity of the overall engineering organisation, or of individual teams, provides more reliable information. Individual productivity metrics are extremely noisy, so a macro view (team, division, org) gives a more precise measurement of average engineer productivity (law of large numbers). A number that averages out the noise is a better decision-making tool. Moreover, drastic changes in metrics at this level of observation are unlikely to be due to a single developer, so problematic changes can be addressed through changes to processes, strategy, roadmap or culture. Engineering managers, who are closest to the action, can meanwhile judge individual developer productivity using a mix of quantitative and qualitative evaluations.
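The averaging argument can be illustrated with a small simulation. This is only a sketch under stated assumptions: the metric (say, merged pull requests per week), the `true_rate` and `noise_sd` values, and the function name are all hypothetical, and the noise is assumed to be independent between engineers, which real teams may not satisfy.

```python
import random
import statistics


def spread_of_team_average(team_size, trials=2000, true_rate=10.0,
                           noise_sd=4.0, seed=0):
    """Standard deviation of a team-averaged metric across simulated periods.

    Every engineer is assumed (hypothetically) to have the same underlying
    rate plus independent Gaussian noise.
    """
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    averages = []
    for _ in range(trials):
        samples = [rng.gauss(true_rate, noise_sd) for _ in range(team_size)]
        averages.append(statistics.fmean(samples))
    return statistics.stdev(averages)


# The spread should shrink roughly as noise_sd / sqrt(team_size).
for size in (1, 10, 100):
    print(size, round(spread_of_team_average(size), 2))
```

Under these assumptions, the average over 100 engineers fluctuates about ten times less than a single engineer's number, which is why a sudden shift at that level is more likely to be signal than noise.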

AI impact on engineering productivity is multi-dimensional

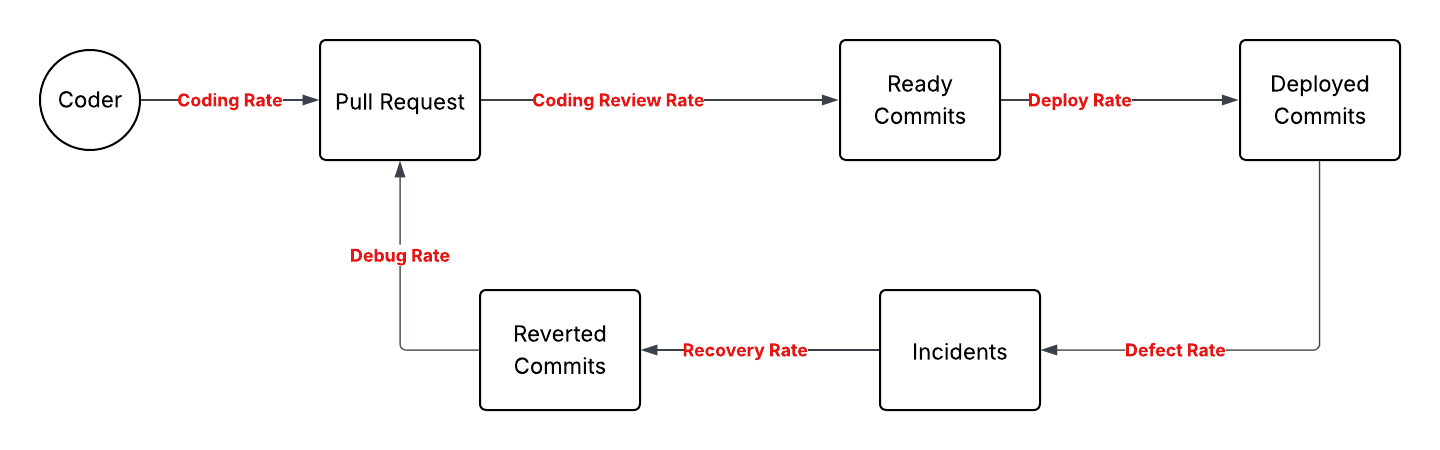

As developer productivity is multi-dimensional, systems thinking is an appropriate framework for understanding its dimensions. I recently started reading An Elegant Puzzle: Systems of Engineering Management by Will Larson, and I'm particularly fond of his system diagram for engineering productivity.

Looking at this diagram, the introduction of AI coding assistants such as Cursor, Copilot or Windsurf will have a positive impact on the coding rate. I believe this is a great improvement, but it's only part of the story. If you are producing 5x the number of pull requests, are you really able to review 5x the number of pull requests with the same attention? What about 10x, 20x? I believe that, unless an organisation plans for an increase in coding rate, it will face either bottlenecks, because the downstream processes cannot keep up, or a decrease in code quality as those processes are rushed to match the higher coding rate:

- if you produce more code, you need to review more code

- if you approve more code, you need to release more code

- if you release more code, your system evolves faster, so you have more change to keep up with and more incidents to manage

- if you have more incidents to manage, you have more work to do to recover your systems

At this stage of maturity, if coding rate increases quickly enough without any significant change to the downstream release process, AI is merely supporting the addition of technical debt.
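The bottleneck argument above can be made concrete with a toy queue model. This is a deliberately simplified sketch: the function name and all the numbers (20 pull requests per week before AI assistance, 100 after, a fixed review capacity of 25) are hypothetical, and it only models the review stage, not release or incident management.

```python
def simulate_review_queue(prs_opened_per_week, review_capacity_per_week, weeks):
    """Return the number of unreviewed pull requests at the end of each week."""
    backlog = 0
    history = []
    for _ in range(weeks):
        backlog += prs_opened_per_week
        # Reviewers can only process up to their fixed weekly capacity.
        reviewed = min(backlog, review_capacity_per_week)
        backlog -= reviewed
        history.append(backlog)
    return history


# Hypothetical numbers: same review capacity, 5x the coding rate.
baseline = simulate_review_queue(20, 25, weeks=4)
with_ai = simulate_review_queue(100, 25, weeks=4)
print(baseline)  # [0, 0, 0, 0]
print(with_ai)   # [75, 150, 225, 300]
```

In this model the backlog grows without bound as soon as the coding rate exceeds review capacity. The only ways out are to raise downstream capacity or to review each pull request less carefully, which is the quality trade-off described above.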

Opportunities

This is still quite early and, as with any other technology, the hype will die down and genuine productivity gains will emerge (I believe so). As of now, improvements in engineering productivity are overestimated.

I believe it is because:

- there's still quite a lot of hype which tends to create overoptimism

- the introduction of AI fails to address engineering productivity holistically

What we are experiencing is just the beginning, and as adoption increases and the space matures, I believe we'll see advancements that tackle code review, deployment and incident management.

References